What is a Cognitive Insight Model (CIM)?

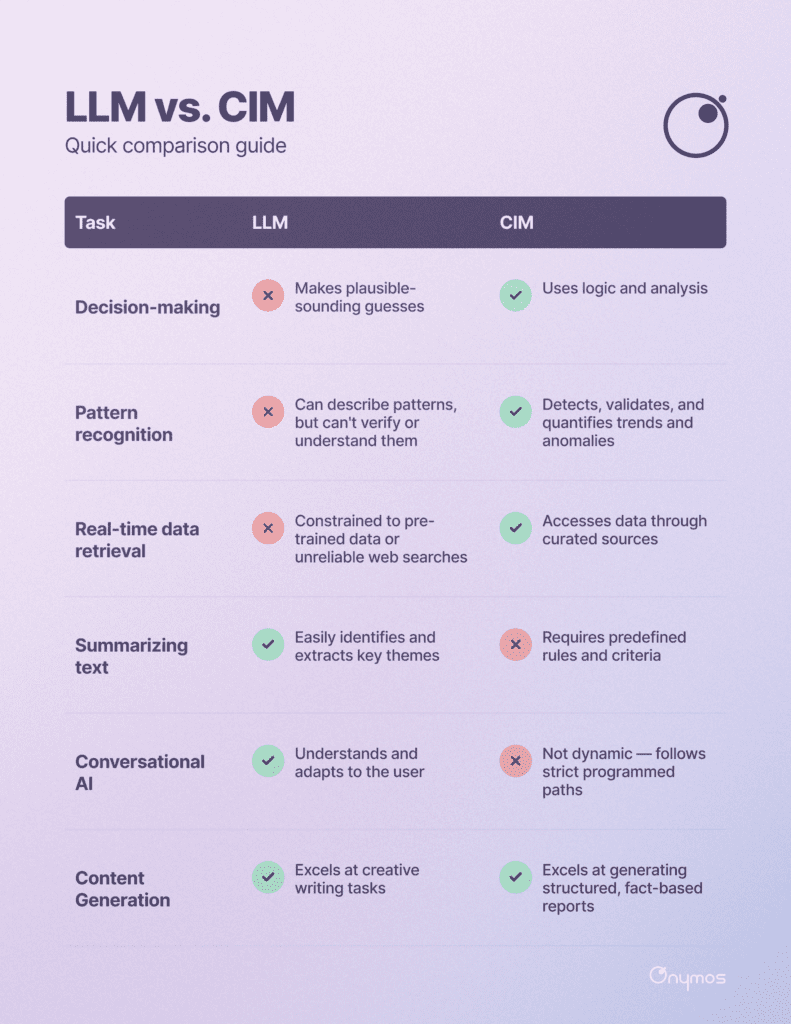

AI is the biggest thing in tech, and maybe the biggest thing in anything, right now. But when most people talk about “AI,” they’re talking about Large Language Models (LLMs). Not to minimize them or undersell their capabilities, but these LLMs (like ChatGPT, Claude, and DeepSeek) are really just sophisticated text generators. They use context, including your inputs and their own earlier outputs, to predict which words should come next. A Cognitive Insight Model (CIM) uses some of the same technology as an LLM, but it’s designed to generate real insights, not just to sound like it does.

To understand CIMs better, we should understand LLMs, too.

Large Language Models don’t know what you’re talking about

A “bank” can refer to a financial institution, or it can refer to a slope beside a river (among other things). If you ask an LLM to give you a list of banks with the best high-yield savings accounts, it will determine that the probability you’re referring to a riverbank is pretty slim and give you the contextually relevant answer you want.

It doesn’t really understand what a bank is (the “financial institution” kind or the “slope beside a river” kind). It just knows that certain combinations of words appear near each other in certain contexts, and it tries to generate text that matches the patterns in its training data. If that training data came from Reddit, for example, it might list banks that often appear in comments on posts about high-yield savings accounts.
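To make “words that appear near each other” concrete, here is a deliberately tiny sketch in Python. It is not how a real LLM is built (those use neural networks trained on enormous datasets); it only counts which word follows which in a made-up, three-sentence corpus. The corpus and the `most_likely_next` helper are our own illustrative assumptions.

```python
from collections import Counter, defaultdict

# Made-up "training data" for illustration only.
corpus = (
    "the bank raised its savings rate . "
    "the bank raised its loan rate . "
    "the river bank flooded ."
).split()

# For every word, count the words that immediately follow it.
following = defaultdict(Counter)
for current_word, next_word in zip(corpus, corpus[1:]):
    following[current_word][next_word] += 1


def most_likely_next(word):
    """Return the word seen most often after `word`, or None if unseen."""
    candidates = following.get(word)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


print(most_likely_next("bank"))   # the most frequent follower of "bank"
print(most_likely_next("river"))  # the only follower of "river"
```

Nothing in this sketch knows what a bank is. It picks “raised” after “bank” only because that pairing shows up most often in the data it was given, so if the data were different, the answer would be too.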

The problem is that training data can be incomplete, outdated, biased, or totally wrong. This is where hallucinations come from: when LLMs invent false but plausible-sounding answers because they’re just attempting to generate the most statistically likely combination of words.

Sometimes, even calling these kinds of answers “plausible-sounding” is generous, like when Google’s AI Overview told users “they could use glue to stick cheese to pizza and eat one rock per day.”

How a Cognitive Insight Model works

CIMs are designed to approach their tasks analytically, not probabilistically. A CIM interprets data using structured, rule-based logic. Unlike an LLM, it doesn’t rely on probability-driven text prediction.

If we asked a CIM the same question about high-yield savings accounts, we would, first of all, probably be using a specialized financial insights platform rather than a generalist one like ChatGPT. Specificity is the cost of accuracy.

Next, the CIM would retrieve real-time data from defined sources (like a proprietary API specifically for this task), programmatically filter and assign values to that data based on specific business rules, and finally present the user with a ranked list of banks.
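The retrieve, filter, score, and rank steps can be sketched in a few lines of Python. This is a simplified illustration, not a description of any real CIM: the `fetch_accounts` function stands in for a call to a defined data source, the account figures are invented, and both business rules (require FDIC insurance, subtract 0.1 points of yield per dollar of monthly fee) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SavingsAccount:
    bank: str
    apy_percent: float   # annual percentage yield
    monthly_fee: float   # in dollars
    fdic_insured: bool


def fetch_accounts():
    """Stand-in for a defined data source such as an API; values are made up."""
    return [
        SavingsAccount("Bank A", 4.50, 0.0, True),
        SavingsAccount("Bank B", 5.00, 10.0, True),
        SavingsAccount("Bank C", 5.25, 0.0, False),
        SavingsAccount("Bank D", 4.75, 0.0, True),
    ]


def passes_rules(account):
    # Hypothetical business rule: only insured accounts are eligible.
    return account.fdic_insured


def score(account):
    # Hypothetical business rule: each dollar of monthly fee
    # costs 0.1 points of yield.
    return account.apy_percent - 0.1 * account.monthly_fee


def rank_accounts(accounts):
    """Filter by the rules, then sort from highest score to lowest."""
    eligible = [a for a in accounts if passes_rules(a)]
    return sorted(eligible, key=score, reverse=True)


ranked = rank_accounts(fetch_accounts())
for position, account in enumerate(ranked, start=1):
    print(position, account.bank, round(score(account), 2))
```

Every step here is explicit and repeatable. Bank C has the highest yield but is filtered out by the insurance rule, and Bank B drops to last place because of its fee. You can trace exactly why each bank landed where it did, which is not something you can do with predicted text.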

Where is all of our new knowledge?

In a conversation with Dario Amodei, the CEO of Anthropic, podcaster Dwarkesh Patel asked him the following: “One question I had for you while we were talking about the intelligence stuff was, as a scientist yourself, what do you make of the fact that these things have basically the entire corpus of human knowledge memorized and they haven’t been able to make a single new connection that has led to a discovery?”

By now, the reason LLMs haven’t been able to do this independently is hopefully obvious: they’re not designed for insights in the first place. LLM-generated insights, when they happen, and to whatever degree they happen, are just byproducts of pattern matching text.

But why aren’t CIMs discovering things independently?

AI still needs humans (that’s probably a good thing)

Well, CIMs are still reliant on human-defined rules and inputs, including what data they can access, how they access it, and what they can do with it. Some CIMs are already more “agentic” than others, and as the technology improves, a CIM’s ability to test hypotheses or explore possibilities will, too.

But it’s crucial to note that I used the word “independently” when talking about the limitations of AI models. They have helped humans make “new connections,” the same kind Patel is talking about. They just still need humans to help them do it. It’s a reciprocal relationship.

For example, the deep learning model AlphaFold 3 is revolutionizing how biochemists and other scientists develop new disease-fighting drugs. It works by predicting how the “billions of molecular machines” inside us interact with each other.

In other parts of healthcare and biotechnology, CIMs specifically are increasingly used to help doctors and researchers identify the biomarkers of disease or filter decades of patient records for relevant data.

When to use a Cognitive Insight Model

It’s very likely your enterprise’s data holds insights that aren’t visible on the surface. Extracting them manually is costly and time-consuming, and even then, humans alone might not be able to do it effectively. This is where CIMs shine. CIMs, LLMs, and other kinds of AI models also aren’t mutually exclusive. In practice, using them together can be the most effective way to solve real-world problems.

At Onymos, we’re building CIMs for precision medicine, pharmaceutical, and biotech enterprises to hyperautomate their document processing workflows. These models not only make processing faster and more efficient, they can also derive deeper insights from the tens of thousands of patient profiles, TRFs, and other documents they ingest.

We’re also innovating CIMs for Edge AI applications in IoMT (Internet of Medical Things) to support next-gen smart devices.

If you think CIMs can transform your processes or connected devices, you’re right! And we can help you. Analytical, semi-autonomous AI models and agents (like CIMs) are becoming must-haves for industries like healthcare that process so much sensitive — and potentially life-saving — data. Reach out to our team for all the details and to see a CIM in action.