ChatGPT Convos Went Public and Healthcare Should Pay Attention

For the last few months, your ChatGPT conversations might have been showing up in Google search results.

On July 30th, Fast Company was the first to report that Google was “indexing conversations with ChatGPT that users have sent to friends, families, or colleagues — turning private exchanges intended for small groups into search results visible to millions.”

Those chats were quickly pulled from the web.

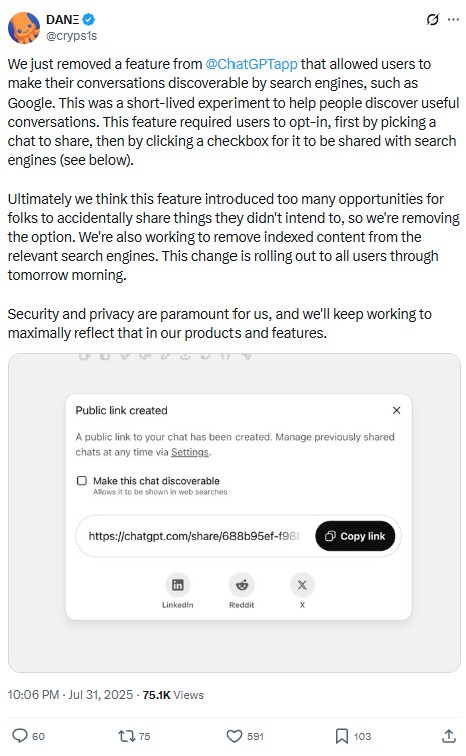

OpenAI’s CISO, Dane Stuckey, tried to frame the whole incident on X as “a short-lived experiment.” The response was mixed.

One user replied, “Good to see decisive movement on this, nice work!”

Another argued, “How is everyone in support of removing this? This was one of my favorite features that I thought was very beneficial for education on certain topics like nanotech and biotech.”

But it’s clear that a lot of people probably didn’t understand what they were doing when they checked a box to “make [their] chat discoverable.” And it’s possible that at least some of those logs, however briefly available they were, might have included actual doctors sharing their patients’ protected health information (PHI).

If that seems unlikely, it isn’t. In 2023, experts like Christian Hetrick were already sounding the alarm. In his article, “Why doctors using ChatGPT are unknowingly violating HIPAA,” he wrote, in part: “Physicians are using ChatGPT for many things, mainly to consolidate notes. There has been a lot of focus on using AI to quickly find answers to clinical questions, but a lot of practical interest among physicians has also been in summarizing visits or writing correspondence that everybody has to do, but nobody wants to do. A lot of that content has protected health information in it.”

In 2024, over 40% of all reported third-party data breaches occurred in the healthcare space, more than in any other industry.

Putting a patient’s PHI into ChatGPT is, functionally, just another kind of third-party data breach, except that the perpetrator is the user. This can be true even if only OpenAI itself has visibility into these conversations, and not the entire Internet. But if a physician or laboratory technician did directly (and hopefully accidentally) opt in to sharing their chats…

Once that data is out, you can’t pull it back. Even if Google de‑indexes a link, cached versions and third‑party scrapers may already have it. And if a bad actor downloaded your conversation, it’s gone for good.

And you can be absolutely sure bad actors have been busy collecting all of that exposed PHI, whoever put it there. On the black market, health records command a far higher price than financial data. A single electronic health record can fetch $60 on the dark web, compared to about $15 for someone’s Social Security number or just $3 for credit card info. Criminals prize health data because of its richness: a single record combines social, financial, and medical details.

But the lesson here isn’t that ChatGPT is inherently unsafe. It’s that consumer AI tools aren’t designed for protected data. Even if ChatGPT never lets Google index chats again, putting PHI or sensitive business information into a public AI tool is still a risk. Once that data leaves your system, you’ve lost control of it.

AI without the compromise

If AI is going to transform healthcare, it needs to be able to do it without creating new compliance risks. That’s why we built Onymos Nucleus, an AI solution designed for secure, internal use in healthcare and life sciences environments.

Nucleus is made for HIPAA‑compliant, private deployment, where no data is sent outside your organization’s infrastructure. And Onymos’ own No-Data Architecture means zero visibility and zero retention on our end. We don’t ever see or capture customer data.

With Nucleus, your team can summarize sensitive documents, generate reports, and search your org’s entire proprietary knowledge base for instant, source-linked answers.

The ChatGPT indexing incident is a reminder that consumer AI isn’t built for HIPAA. Onymos Nucleus is. Reach out to our team and see how.