AI Governance in Healthcare Starts With Data

The entire conversation around AI governance in healthcare is happening at the wrong level. Everyone’s asking:

- “Is this AI model biased?”

- “Can we explain its decisions?”

- “Is this vendor SOC 2 compliant?”

Those are important questions, but there’s another question almost nobody’s asking: “Why are we relying on systems that require trusting more and more vendors with our data in the first place?”

The uncomfortable truth is that most AI governance frameworks assume vendor promises of “security and compliance” are enforceable. They’re not.

The contract you sign is only as good as the vendor’s commitment to honoring it and their ability to actually secure what they’re promising to secure.

And across healthcare, vendors are not keeping data secure. Business associate breaches grew by an unprecedented 445% between Q1 and Q2 of 2025, from just over 1 million affected patients to more than 6.3 million.

You can have the most rigorous AI ethics policy in the world. You can require bias testing, demand explainability, and mandate human oversight. But if your architecture requires sending sensitive data to third parties, you’re not doing effective AI governance. You’re outsourcing governance and hoping for the best.

The vendor layer problem

Many healthcare organizations use AI tools and services that sit between them and the actual model provider. These kinds of vendor-hosted AI technologies are the industry norm.

Consider what actually happens when a physician uses a typical AI-powered medical scribe service:

- The doctor-patient conversation is recorded

- Audio is sent to the scribe service and queued for processing

- The scribe service processes it through OpenAI’s Whisper for transcription

- It sends the transcript through GPT-5 for clinical note generation

- Finally, it stores the audio file, transcript, and clinical notes on its platform and presents them to the physician through a dashboard for verification, EHR integration, or further editing

That’s not one vendor relationship. That’s a data pipeline with multiple custody transfers, multiple storage points, and multiple potential failure modes.
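To make the custody transfers concrete, here is a minimal sketch that models the scribe workflow above as a list of hops and counts the handoffs, outside parties, and external storage points. The custodian names and the `stored` flags are illustrative assumptions, not a description of any specific vendor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    """One step in the pipeline: who holds the data and whether they persist it."""
    step: str
    custodian: str   # party holding the data at this step
    artifact: str    # what is being handled
    stored: bool     # True if the data is persisted at this step


# Hypothetical trace of the scribe workflow described above.
# Custodian names and "stored" flags are assumptions for illustration.
PIPELINE = [
    Hop("record conversation", "clinic", "audio", stored=False),
    Hop("upload and queue", "scribe_service", "audio", stored=True),
    Hop("transcribe", "model_provider", "audio", stored=False),
    Hop("generate note", "model_provider", "transcript", stored=False),
    Hop("store and present", "scribe_service", "audio+transcript+note", stored=True),
]


def custody_transfers(pipeline):
    """Count how often data passes from one custodian to a different one."""
    return sum(1 for a, b in zip(pipeline, pipeline[1:]) if a.custodian != b.custodian)


def external_custodians(pipeline, owner="clinic"):
    """Return the distinct parties other than the data owner that handle the data."""
    return sorted({hop.custodian for hop in pipeline} - {owner})


def storage_points(pipeline, owner="clinic"):
    """List the steps where data is persisted outside the owner's environment."""
    return [hop.step for hop in pipeline if hop.stored and hop.custodian != owner]


print(custody_transfers(PIPELINE), external_custodians(PIPELINE), storage_points(PIPELINE))
```

Running the same three functions over a trace of your own workflows gives a quick inventory of where data changes hands and where it is stored outside your environment.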

And if that scribe service gets acquired? Whoever buys it now owns recordings of its customers’ patient conversations. In the best-case scenario, the customer approves of the buyer or can delete or export their data before ownership transfers. In the worst-case scenario, they may have to get their state to sue the new owner.

But it gets even more complex.

That medical scribe service isn’t just storing its customers’ data. It’s also managing who can access it. When you add an intermediary vendor between you and the AI you use, you’re not just adding one more entity to your software supply chain. You’re adding an entire workforce, including shadow vendors (your vendor’s vendors), who you’ve never vetted and can’t audit.

So, every vendor layer compounds your risk. Not linearly. Exponentially, because each vendor brings vendors of its own.
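A rough way to see the compounding is to count how many parties end up with access as the vendor chain deepens. The sketch below assumes every vendor relies on the same number of subvendors (`fan_out`) and that breaches are independent with a made-up per-party probability. Both are simplifying assumptions chosen for illustration, not measured figures.

```python
def parties_with_access(fan_out: int, depth: int) -> int:
    """Count vendors in a tree where every vendor relies on `fan_out` subvendors.

    depth=1 counts only your direct vendor; depth=2 adds its vendors, and so on.
    """
    return sum(fan_out ** level for level in range(depth))


def chance_any_breached(per_party_risk: float, parties: int) -> float:
    """Probability that at least one party is breached, assuming independence."""
    return 1 - (1 - per_party_risk) ** parties


# Hypothetical inputs: 3 subvendors per vendor, 2% breach risk per party.
for depth in range(1, 5):
    n = parties_with_access(fan_out=3, depth=depth)
    print(depth, n, round(chance_any_breached(0.02, n), 3))
```

With a fan-out of 3, the party count goes 1, 4, 13, 40 as depth increases from 1 to 4, which is the geometric growth the paragraph above describes.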

The No-Data Architecture alternative

But you don’t actually have to let vendors sit between you and your AI (and your data).

Onymos’ AI applications designed with No-Data Architecture are optimized to deploy inside infrastructure you control, without Onymos ever having access to sensitive information (i.e., Onymos sees no data and saves no data).

This doesn’t mean you can’t or won’t use external services, but those become choices you make directly.

If your risk tolerance demands it, No-Data Architecture makes it possible to go fully private with self-hosted AI models and dedicated infrastructure.

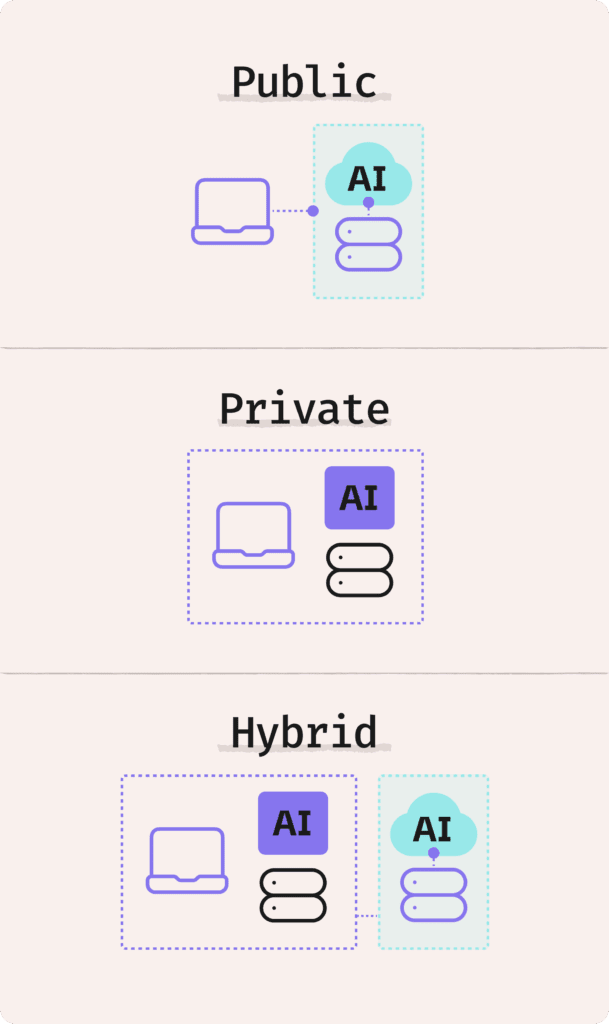

That’s one kind of deployment option, but there are two others to consider:

- In a public cloud, you control your own tenancy on shared infrastructure from providers like AWS, Azure, or GCP. It delivers scalability and elasticity while keeping data access tightly controlled.

- A hybrid model blends public and private. It uses the public cloud for scalable, high-volume workloads while keeping sensitive data and processes protected within private infrastructure.
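As an illustration of how the three options differ in practice, here is a small routing sketch. The `contains_phi` flag, the mode names, and the `route` function are all hypothetical; this is not Onymos’ implementation, only one way to express the rule that sensitive work stays private in a hybrid setup.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    PRIVATE = "private"  # self-hosted models on dedicated infrastructure
    PUBLIC = "public"    # your own tenancy on a public cloud


@dataclass(frozen=True)
class Workload:
    name: str
    contains_phi: bool   # hypothetical flag: touches protected health information


def route(workload: Workload, mode: str) -> Target:
    """Pick where a workload runs under a given deployment mode."""
    if mode == "private":
        return Target.PRIVATE
    if mode == "public":
        return Target.PUBLIC
    if mode == "hybrid":
        # Sensitive work stays private; everything else can scale out.
        return Target.PRIVATE if workload.contains_phi else Target.PUBLIC
    raise ValueError(f"unknown deployment mode: {mode!r}")


print(route(Workload("clinical notes", contains_phi=True), "hybrid"))
print(route(Workload("public FAQ search", contains_phi=False), "hybrid"))
```

In each mode the routing decision is made by code you run, inside infrastructure you control.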

Regardless of which deployment option you choose, No-Data Architecture makes third-party data breaches through Onymos impossible, because Onymos never has your data.

It ensures regulatory compliance is always an internal control problem, not a vendor management problem.

And it puts you in complete control of your own governance processes, including data handling, retention, portability, and access.

The real cost of getting your AI governance structure or AI implementation wrong

In 2024, 41% of third-party breaches affected healthcare organizations.

Meanwhile, AI adoption across the industry is accelerating. Clinical documentation, diagnostic support, administrative automation… AI is touching more sensitive data every day.

If you’re leading AI governance in healthcare, here’s what matters:

- Don’t treat vendor security as a solved problem.

Every vendor relationship is a risk surface. Minimize them.

- Question architectural assumptions.

Does your AI deployment require data to leave your environment? Or is that just the default because that’s how the vendor built it?

- Prioritize vendors who don’t need access.

When you’re evaluating AI solutions, ask: “Where does our data go?” If the answer is “to us, we’ll keep it safe,” ask why they need it at all.

- Design for governance, not policy.

The best compliance frameworks are the ones that make violations difficult by design, not by policy.
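One way to read “difficult by design” is to put the rule in the code path itself, so that data cannot be sent to an unapproved destination even if someone forgets the policy. The sketch below is a minimal egress allowlist. The host names, `check_destination`, and `send_transcript` are hypothetical names used for illustration.

```python
from urllib.parse import urlparse

# Hypothetical allowlist: only hosts inside infrastructure you control.
ALLOWED_HOSTS = frozenset({"llm.internal.example", "ehr.internal.example"})


class EgressBlocked(Exception):
    """Raised when code tries to send data to a host that is not allowlisted."""


def check_destination(url: str, allowed_hosts=ALLOWED_HOSTS) -> str:
    """Return the URL unchanged if its host is allowlisted, else raise."""
    host = urlparse(url).hostname
    if host is None or host not in allowed_hosts:
        raise EgressBlocked(f"refusing to send data to {host!r}")
    return url


def send_transcript(url: str, transcript: str, transport) -> None:
    """Send a transcript only after the destination passes the allowlist check.

    `transport` is any callable taking (url, payload). It is injected here so
    the sketch runs without a network connection.
    """
    transport(check_destination(url), transcript)
```

A written policy can say “do not send transcripts to third parties.” A guard like this makes the same statement in a form that fails loudly when it is violated.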

AI at Onymos

At Onymos, we built our AI-powered workflow automation and knowledge management solutions on the principle that the most trustworthy vendor is the one that never needs you to trust them at all.

That’s why Cloudwave, one of our software partners, turned to Onymos DocKnow, our AI document processing platform, for its projects with the United States government. Federal agencies face many of the same challenges healthcare organizations do. They need AI capabilities without creating vendor dependencies that expose sensitive data.

Ready to explore what No-Data Architecture means for your AI strategy? Reach out to the Onymos team to learn more about DocKnow or our individual solutions like Nucleus, a private LLM and embedded knowledge base.

Not quite ready yet? Review our FAQ on AI governance and No-Data Architecture below.